Hierarchical Prompting Taxonomy (HPT)

A Universal Evaluation Framework for Large Language Models Aligned with Human Cognitive Principles

Overview

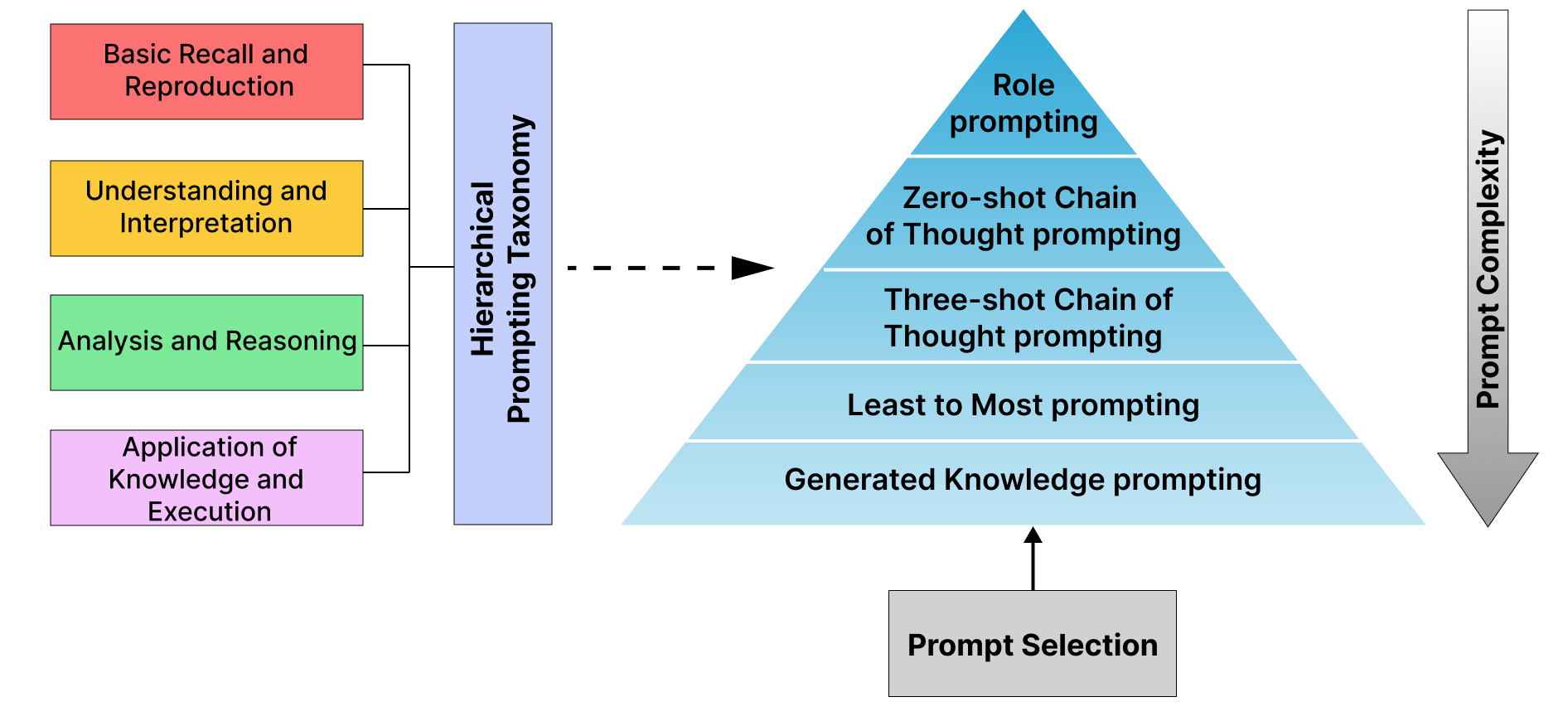

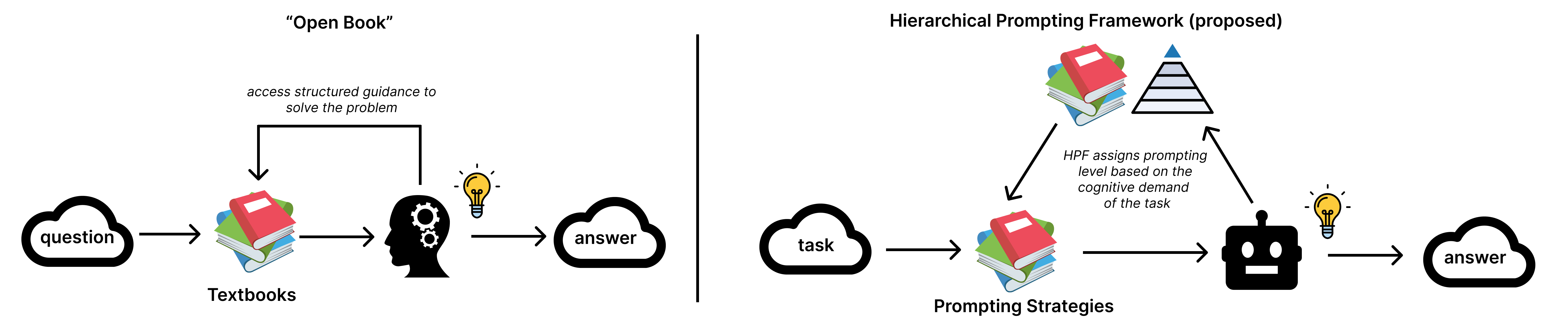

Hierarchical Prompting Taxonomy (HPT) is a universal evaluation framework for large language models (LLMs), grounded in human cognitive principles. HPT introduces the Hierarchical Prompting Framework (HPF), which arranges five distinct prompting strategies in a hierarchy ordered by their cognitive demands. The framework also proposes the Hierarchical Prompting Index (HPI), which quantifies both the complexity of tasks and the cognitive competencies of LLMs across diverse datasets.
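The escalation idea behind HPF and HPI can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' implementation. It assumes three things. First, a sample is scored by the position of the first (least demanding) strategy that solves it. Second, an unsolved sample receives the number of levels plus a penalty. Third, a dataset's HPI is the mean of its sample scores. The level labels `level_1` through `level_5`, the `penalty` parameter, and the functions `sample_score` and `hierarchical_prompting_index` are hypothetical names introduced for illustration.

```python
from typing import Callable, Sequence

# Hypothetical placeholder labels: the five HPF strategies, ordered from the
# least to the most cognitively demanding (actual strategy names not assumed).
LEVELS: Sequence[str] = ("level_1", "level_2", "level_3", "level_4", "level_5")


def sample_score(solves: Callable[[str], bool],
                 levels: Sequence[str] = LEVELS,
                 penalty: float = 1.0) -> float:
    """Return the 1-based rank of the first strategy that solves the sample.

    `solves(level)` reports whether the model answers correctly when prompted
    with that strategy. If no strategy succeeds, the score is
    len(levels) + penalty (the penalty value is an assumption).
    """
    for rank, level in enumerate(levels, start=1):
        if solves(level):
            return float(rank)
    return len(levels) + penalty


def hierarchical_prompting_index(scores: Sequence[float]) -> float:
    """Average the per-sample scores over a dataset (assumed aggregation)."""
    if not scores:
        raise ValueError("need at least one sample score")
    return sum(scores) / len(scores)


# Toy oracles standing in for a model's success on three samples.
toy_samples = [
    lambda lvl: True,                                     # solved at the first level
    lambda lvl: lvl in ("level_3", "level_4", "level_5"), # needs level 3 or higher
    lambda lvl: False,                                    # never solved
]
scores = [sample_score(s) for s in toy_samples]
print(scores)                                 # [1.0, 3.0, 6.0]
print(hierarchical_prompting_index(scores))   # 3.3333333333333335
```

Under these assumptions, a lower HPI means the model succeeded with less demanding prompts. A higher HPI means the dataset pushed it further up the hierarchy.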

HPT enables comprehensive evaluation of both LLMs' problem-solving abilities and dataset intricacy, providing a standardized metric for task complexity. Experiments show that HPF can improve LLM performance by 2% to 63% over baselines, and GSM8K was identified as the most cognitively complex of the tasks tested.
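One way a standardized index supports cross-dataset comparison can be sketched as follows. This is a hypothetical illustration, not the authors' procedure. It assumes each dataset has an HPI per model, that the per-model values are averaged, and that a higher average marks a more cognitively complex dataset. The function `rank_datasets` and all dataset and model names are made up, and the numbers are toy values, not reported results.

```python
from statistics import mean
from typing import List, Mapping, Tuple


def rank_datasets(hpi: Mapping[str, Mapping[str, float]]) -> List[Tuple[str, float]]:
    """Rank datasets by HPI averaged over models, most complex first.

    `hpi` maps dataset name -> {model name -> that model's HPI on the dataset}.
    Assumes a higher HPI means the dataset required more demanding prompts.
    """
    averaged = {name: mean(per_model.values()) for name, per_model in hpi.items()}
    return sorted(averaged.items(), key=lambda item: item[1], reverse=True)


# Toy, made-up values used only to exercise the function.
toy = {
    "dataset_a": {"model_x": 1.5, "model_y": 2.5},
    "dataset_b": {"model_x": 3.0, "model_y": 4.0},
}
print(rank_datasets(toy))  # [('dataset_b', 3.5), ('dataset_a', 2.0)]
```

Averaging across models is only one possible aggregation. Reporting the per-model values alongside the average keeps model-specific differences visible.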

Authors: Devichand Budagam, Ashutosh Kumar, Mahsa Khoshnoodi, Sankalp KJ, Vinija Jain, Aman Chadha

Features & Functionality

- Hierarchical Prompting Framework (HPF) with five distinct prompting strategies ordered by cognitive demand

- Hierarchical Prompting Index (HPI) for quantifying task complexity

- Evaluation of LLMs' cognitive competencies across diverse datasets

- Standardized metric for dataset and task complexity

- Open-source implementation for reproducibility

Challenges & Learnings

The main challenge was designing a framework that meaningfully aligns LLM evaluation with human cognitive principles, and developing metrics that are both interpretable and robust across tasks and models.

This project deepened my understanding of prompt engineering, cognitive science, and the evaluation of large language models.